(- made by notebooklm with my input)


“Prompting is like briefing a highly talented, slightly literal intern.”

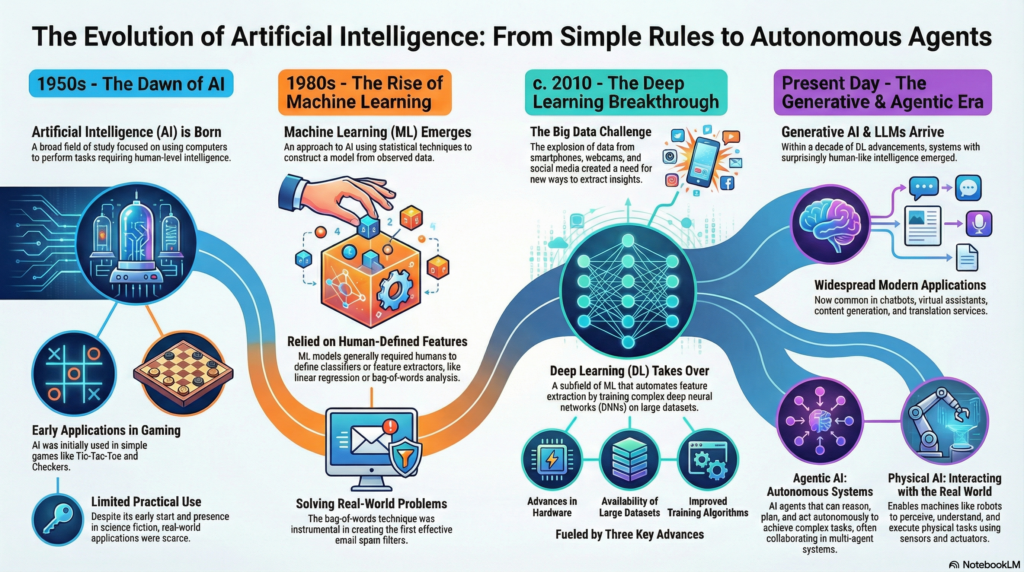

In reality, it’s the process of giving specific instructions to a GenAI tool (like ChatGPT, Gemini, or Claude) to get new information or achieve a specific outcome. Good prompting is more than a command: it’s a blend of context, constraint, and conversation.

The Bad Prompt: “Give me a chicken recipe.”

The Good Prompt: “Act as a resourceful home cook. I need a dinner recipe using chicken breast, spinach, and heavy cream.

Here is your context:

I only have 30 minutes to cook.

I want to use only one pan because I hate doing dishes.

I only have the basics like salt, pepper, oil, and garlic.

Give me a simple, step-by-step recipe with the following constraints:

Use the metric system.

No fancy equipment.

Keep instructions short and concise.

Suggest one side dish that goes well with it.”

When you’re stuck, check your prompt against these five letters:

C — Context: Does the AI know why I’m asking this?

R — Role: Did I tell it who to be? (Expert, friend, critic?)

E — Exclusions: Did I tell it what not to include?

A — Audience: Does it know who is reading the output?

F — Format: Do I want a table, a list, an email, or a poem?
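The checklist can also be sketched as a small helper that flags which of the five letters are still empty before a prompt is sent. This is only an illustration: `PromptBrief`, its field names, and the wording of the rendered prompt are all hypothetical, and the values come from the recipe example above.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """Hypothetical container for the five checks plus the task itself."""
    task: str
    context: str = ""
    role: str = ""
    exclusions: list = field(default_factory=list)
    audience: str = ""
    output_format: str = ""

    def missing(self) -> list:
        """Return the checklist items that are still empty."""
        checks = {
            "Context": self.context,
            "Role": self.role,
            "Exclusions": self.exclusions,
            "Audience": self.audience,
            "Format": self.output_format,
        }
        return [name for name, value in checks.items() if not value]

    def render(self) -> str:
        """Assemble the filled-in fields into one prompt string."""
        parts = []
        if self.role:
            parts.append(f"Act as {self.role}.")
        parts.append(self.task)
        if self.context:
            parts.append(f"Context: {self.context}")
        if self.audience:
            parts.append(f"Audience: {self.audience}")
        if self.exclusions:
            parts.append("Do not include: " + "; ".join(self.exclusions))
        if self.output_format:
            parts.append(f"Format: {self.output_format}")
        return "\n".join(parts)


brief = PromptBrief(
    task="I need a dinner recipe using chicken breast, spinach, and heavy cream.",
    context="I have 30 minutes, one pan, and only basics like salt, pepper, oil, and garlic.",
    role="a resourceful home cook",
    exclusions=["fancy equipment"],
    output_format="short step-by-step recipe in metric units, plus one side dish",
)
print(brief.missing())  # the audience was never stated in the recipe prompt
print(brief.render())
```

Run against the recipe prompt, the helper points out that the audience was never stated, which is exactly the kind of gap the checklist is meant to catch.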

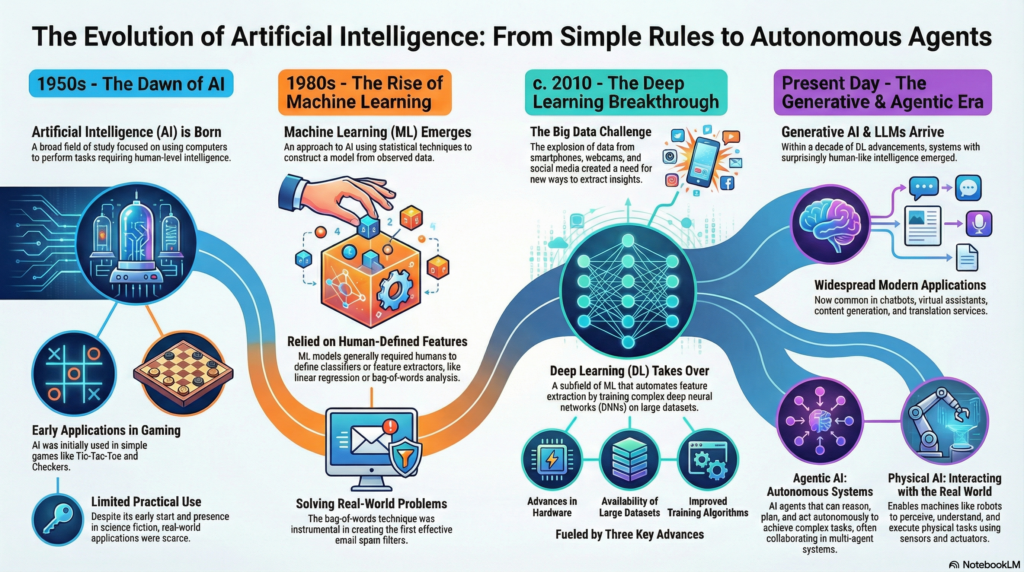

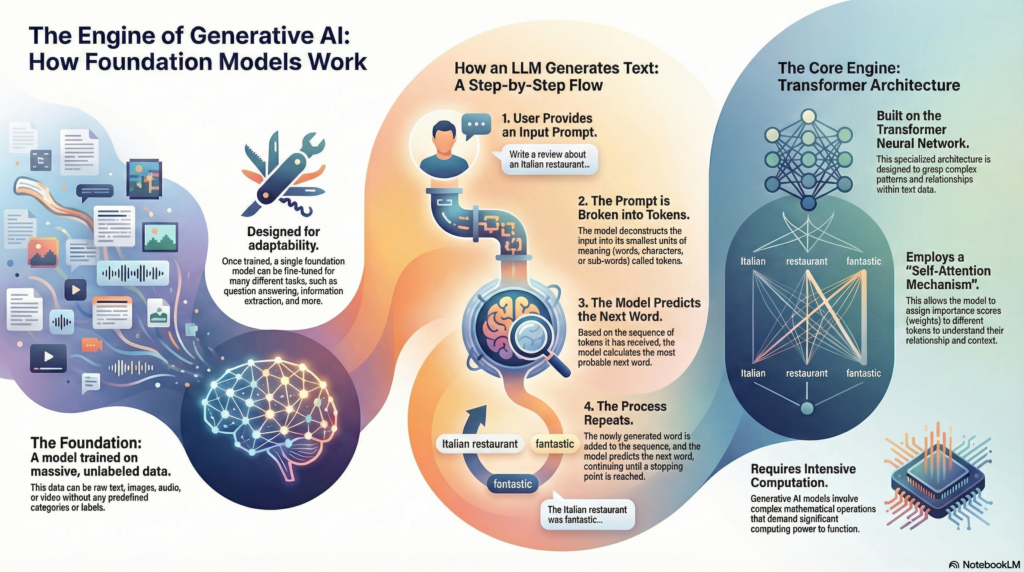

GenAI doesn’t know stuff the way we do. It predicts the next most likely word in a sequence, much like an auto-complete feature. This is why Context is so important. You are narrowing the probability of which words it chooses so they match your intent.

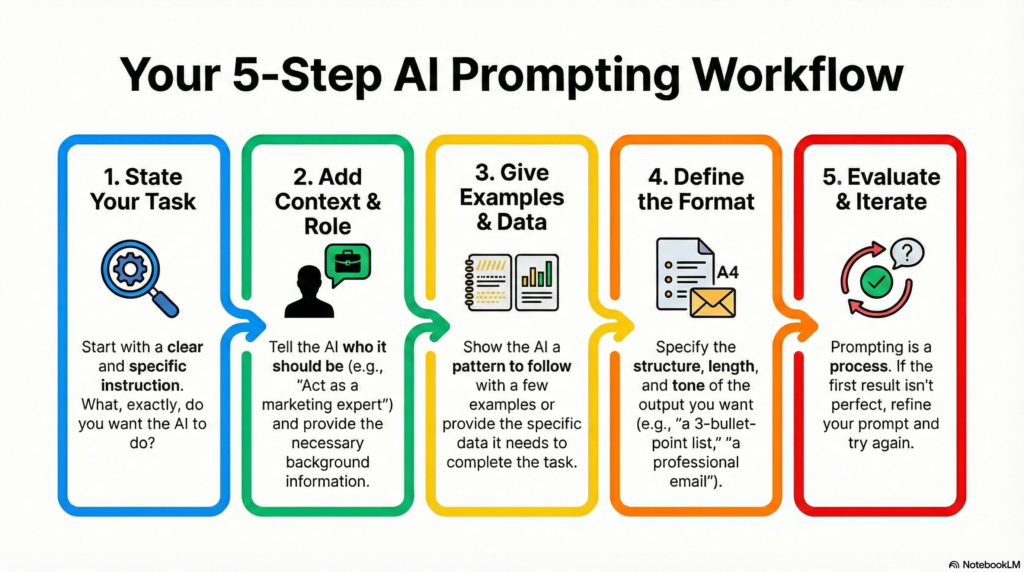

1. Tokenisation. Computers read numbers, not letters. Your prompt is broken into “tokens.” For example, “Tokenisation is fun!” becomes Token + isation + is + fun + !. Each token is then converted into a numeric ID, giving a list like [34502, 421, 328, …].
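A minimal sketch of the idea, assuming a tiny hand-written vocabulary and a greedy longest-match rule. Real tokenisers learn their vocabulary from data and use more involved algorithms, so the pieces, the IDs, and the `tokenize` function here are invented for illustration only.

```python
# Toy vocabulary: the pieces and their IDs are invented for illustration.
VOCAB = {"Token": 0, "isation": 1, " is": 2, " fun": 3, "!": 4}


def tokenize(text, vocab):
    """Split text into vocabulary pieces, always taking the longest match."""
    tokens = []
    pos = 0
    while pos < len(text):
        match = None
        # Try the longest candidate first, then shrink it one character at a time.
        for end in range(len(text), pos, -1):
            if text[pos:end] in vocab:
                match = text[pos:end]
                break
        if match is None:
            raise ValueError(f"no vocabulary piece matches at position {pos}")
        tokens.append(match)
        pos += len(match)
    return tokens


pieces = tokenize("Tokenisation is fun!", VOCAB)
ids = [VOCAB[piece] for piece in pieces]
print(pieces)  # ['Token', 'isation', ' is', ' fun', '!']
print(ids)     # [0, 1, 2, 3, 4]
```

Note that the spaces travel with the pieces (" is", " fun"), which is one common way tokenisers keep track of word boundaries.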

2. Embeddings. Each token ID is mapped to a point on a massive multi-dimensional map. On this map, words with similar meanings sit closer together. “Apple” is near “Pear,” but far from “House.”

Imagine a 3D map where:

Up/Down: How formal the word is.

Left/Right: How “technical” the word is.

Forward/Backward: How “happy” the word is.
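The 3D map can be sketched with three made-up coordinates per word. The numbers below are invented purely to show the distance comparison; real embeddings have hundreds or thousands of dimensions, and their axes do not come with tidy labels like these.

```python
import math

# Invented coordinates on the imaginary map: (formality, technicality, happiness).
EMBEDDINGS = {
    "apple": (0.20, 0.10, 0.70),
    "pear": (0.25, 0.10, 0.65),
    "house": (0.60, 0.50, 0.30),
}


def distance(word_a, word_b):
    """Straight-line distance between two words on the toy map."""
    return math.dist(EMBEDDINGS[word_a], EMBEDDINGS[word_b])


print("apple-pear: ", round(distance("apple", "pear"), 3))
print("apple-house:", round(distance("apple", "house"), 3))
```

With these values, "apple" lands much closer to "pear" than to "house", which is all that "similar" means on the map.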

3. The Transformer & Attention. The AI calculates how much “attention” to pay to each word. In the sentence “The bank was closed because the river flooded,” the attention mechanism realises “bank” refers to a river bank, not a financial one, because it saw the word “flooded” nearby.
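A rough sketch of how those attention scores can be computed, assuming invented two-number vectors in place of real embeddings. In an actual transformer the queries, keys, and values are learned projections across many attention heads; here each word's vector plays all three roles to keep the arithmetic visible.

```python
import math

# Invented 2-D vectors for illustration: (water-related, money-related).
SENTENCE = [
    ("The", (0.0, 0.0)),
    ("bank", (0.5, 0.5)),  # ambiguous on its own
    ("was", (0.0, 0.0)),
    ("closed", (0.1, 0.3)),
    ("because", (0.0, 0.0)),
    ("the", (0.0, 0.0)),
    ("river", (1.0, 0.0)),
    ("flooded", (0.9, 0.0)),
]


def attention_weights(query, keys):
    """Softmax over scaled dot products between one query and every key."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    exps = [math.exp(score) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]


vectors = [vec for _, vec in SENTENCE]
bank = dict(SENTENCE)["bank"]
weights = attention_weights(bank, vectors)

# Blend every word's vector by its weight to get a context-aware "bank".
context = tuple(
    sum(w * vec[i] for w, vec in zip(weights, vectors)) for i in range(2)
)

for (word, _), weight in zip(SENTENCE, weights):
    print(f"{word:8s} {weight:.3f}")
print("bank in context (water, money):", context)
```

In this toy setup "river" and "flooded" receive more weight than filler words like "was", and the blended vector for "bank" ends up leaning toward the water axis rather than the money axis.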

4. Token Prediction. This is the “guessing game.” The AI writes one token at a time by looking at your prompt plus what it has already written, calculating the probability of each possible next token, picking one, and repeating the loop until it predicts a stop token or reaches a length limit.
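The loop can be sketched with a hand-written probability table. Two simplifications are assumed here: the toy only looks at the last token (a real model conditions on the whole prompt plus everything written so far), and it always picks the most likely token (real tools usually sample with some randomness). The table and its probabilities are invented.

```python
# Invented next-token probabilities, keyed by the previous token only.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 0.9, "A": 0.1},
    "The": {"river": 0.6, "bank": 0.4},
    "river": {"flooded": 0.7, "bank": 0.3},
    "flooded": {"<end>": 0.8, "the": 0.2},
}


def generate(max_tokens=10):
    """Greedy decoding: pick the most likely next token until <end>."""
    tokens = ["<start>"]
    while len(tokens) - 1 < max_tokens:
        # Unknown tokens fall back to ending the sequence.
        probs = NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]


print(" ".join(generate()))  # The river flooded
```

Nothing in the loop checks whether the sentence is true. It only follows the highest probability at each step.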

The fact is, GenAI does not THINK or WRITE like we do. It is a probability machine.

Because it prioritizes looking correct over being factually true, it can “hallucinate.” If a fake name or a made-up date is statistically plausible in a sentence, the AI will print it confidently. It isn’t lying. It’s just following the math of which word usually follows another.

Knowing how the gears turn makes us better users. It’s time to get our prompts in order.
